ALTR CEO James Beecham has compared encryption to duct tape. Duct tape is great – it comes in handy when you need a quick fix for a thousand different things or even…to seal a duct. But when it comes to security, you need powerful tools that are fit for purpose.

Today, let’s compare some different methods you could use to secure data – including tokenization vs encryption – to see which is the best fit for your cloud data security.

Tokenization vs Encryption: 3 Reasons to Choose Tokenization

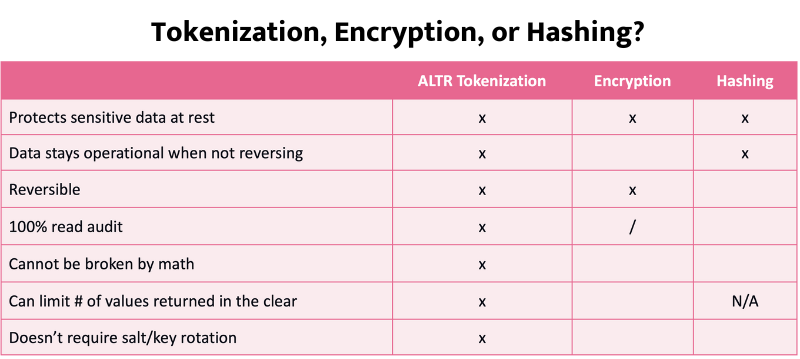

As a data security company, ALTR uses encryption for some things, but when we looked at encryption vs tokenization, we found tokenization far superior for two key data security needs:

- Defeating data thieves

- Enabling data analysis

Companies that want to transform data into business value need both security and analytics. Tokenization delivers the best of both worlds: the strong at-rest protection of encryption and the analysis opportunity provided by similar solutions like anonymization.

3 ways tokenization is superior to encryption:

1. Tokenization is more secure.

It actually replaces the original data with a token, so if someone successfully obtains the digital token, they have nothing of value. There’s no key and no relationship to the original data. The actual data remains secure in a separate token vault.

This is important because we now collect all kinds of information as a society. Companies want to analyze the customer data they hold, whether it’s Netflix, a hospital or a bank. If you’re using encryption to protect the data, you must first decrypt it all to make any use of it or any sense of it. And decrypting leads to data risk.
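To make the vault idea concrete, here is a minimal sketch in Python. It assumes a purely in-memory vault; the `TokenVault` class, its method names and the `tok_` prefix are hypothetical illustrations, not ALTR's implementation. A production vault would be a separate, hardened service.

```python
import secrets


class TokenVault:
    """Hypothetical in-memory vault mapping random tokens to original values."""

    def __init__(self):
        self._token_to_value = {}

    def tokenize(self, value: str) -> str:
        # The token is random, so it has no mathematical relationship to the value.
        token = "tok_" + secrets.token_hex(16)
        self._token_to_value[token] = value
        return token

    def detokenize(self, token: str) -> str:
        # Only a caller with access to the vault can recover the original.
        return self._token_to_value[token]


vault = TokenVault()
ssn_token = vault.tokenize("123-45-6789")

# The application database stores only the token, never the real value.
stored_record = {"customer_id": 42, "ssn": ssn_token}
```

Anyone who steals `stored_record` gets a random string. Recovering the original value requires a lookup in the vault, which is stored and protected separately.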

2. Tokenization enables analytics.

Because tokenization can be deterministic, meaning it maintains the same relationship between a token and the source data every time, accurate analytics can be performed on data in the cloud.

If you provide a particular set of inputs, you get the same outputs every time. Deterministic tokens represent a piece of data in an obfuscated way and give you back the same token or representation when you need it. The token can be a mashup of numbers, letters and symbols, just like an encrypted piece of data, but tokens preserve relationships. The real benefit of deterministic tokenization is allowing analysts to connect two datasets or databases securely, protecting PII privacy while allowing analysts to run their data operations.
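The join scenario can be sketched roughly as follows. This is an illustration under assumptions: the `DeterministicTokenizer` class and the sample datasets are hypothetical, and determinism is achieved here with a simple lookup table rather than any particular vendor technique.

```python
import secrets


class DeterministicTokenizer:
    """Hypothetical tokenizer that returns the same token for the same input."""

    def __init__(self):
        self._value_to_token = {}
        self._token_to_value = {}

    def tokenize(self, value: str) -> str:
        # Reuse the existing token if this value has been seen before.
        if value not in self._value_to_token:
            token = secrets.token_hex(8)
            self._value_to_token[value] = token
            self._token_to_value[token] = value
        return self._value_to_token[value]


tokenizer = DeterministicTokenizer()

# Two separate datasets that share an email column (made-up sample data).
orders = [("alice@example.com", 120.0), ("bob@example.com", 35.5),
          ("alice@example.com", 60.0)]
tickets = [("alice@example.com", "late delivery"), ("carol@example.com", "refund")]

tokenized_orders = [(tokenizer.tokenize(email), amount) for email, amount in orders]
tokenized_tickets = [(tokenizer.tokenize(email), issue) for email, issue in tickets]

# The analyst works only with tokens: total spend per customer token...
spend_by_token = {}
for token, amount in tokenized_orders:
    spend_by_token[token] = spend_by_token.get(token, 0.0) + amount

# ...joined to support tickets on the shared token.
joined = [(token, issue, spend_by_token[token])
          for token, issue in tokenized_tickets if token in spend_by_token]
```

The join matches the two datasets on the token, so the analyst can see that one customer with a late delivery spent 180.0 in total, without ever seeing that customer's email address.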

3. Tokenization maintains the source data.

Because the connection is two-way – tokenization and de-tokenization – you can retrieve the original data if you need it.

Let’s say you’ve collected instrument readings from a personal medical device that I own. If you detect something in that data, like performance degradation, you and I both would appreciate my getting a phone call, an email or a letter informing me I need to replace the device. An irreversible method such as anonymization or one-way hashing would not allow this, because once data such as my name or phone number is converted, the original can no longer be recovered from the database. Encryption is reversible, but only for whoever holds the key, and the data must be decrypted before anyone can use it.

Tokenization vs Anonymization: Limited Analytics Today and Tomorrow

Unlike encryption, anonymization offers some ability to perform basic analysis, but it is limited by the design and intent of the anonymization. Anonymization removes PII by grouping data into ranges, like an age range or zip code area, while removing specifics such as birthdate and street address. This means you can perform a level of analysis on anonymized data, say on your 18-to-25-year-old customers. But what if you wanted a different grouping, or wanted to associate that age range with another data set?

Anonymization is permanent and inflexible. The process cannot be reversed to re-identify individuals, which might not give you enough options. If your team wants to follow up on an initial data run by inviting a group of customers to an event or sending them an offer, you’re stuck without the phone number or mailing address. There’s no relationship to the original PII of the individual.
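As a rough illustration of grouping data into ranges, the sketch below generalizes a birthdate into an age band and a zip code into its first three digits. The band boundaries, field names and the `anonymize` helper are assumptions chosen for this example.

```python
from datetime import date


def age_band(birthdate: date, today: date) -> str:
    """Map a birthdate to a coarse, hypothetical age range."""
    had_birthday = (today.month, today.day) >= (birthdate.month, birthdate.day)
    age = today.year - birthdate.year - (0 if had_birthday else 1)
    if age < 18:
        return "under 18"
    if age <= 25:
        return "18-25"
    if age <= 40:
        return "26-40"
    return "41+"


def anonymize(record: dict, today: date) -> dict:
    # Keep only generalized fields; name, birthdate and street are dropped.
    return {
        "age_band": age_band(record["birthdate"], today),
        "zip3": record["zip"][:3],
    }


customer = {
    "name": "Jane Doe",
    "birthdate": date(2001, 6, 15),
    "zip": "78701",
    "street": "1 Main St",
}
anon = anonymize(customer, today=date(2024, 1, 1))
# anon == {"age_band": "18-25", "zip3": "787"}
```

Nothing in `anon` leads back to Jane Doe, and the bands are fixed at the time the data is anonymized. If you later want a 21-to-30 group, the information needed to build it is gone.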

Tokenization vs Hashing: A One-Way Trip

Another data security tool is one-way hashing. This is a form of cryptographic security that uses an algorithm to convert source data into an obfuscated value of a fixed length. Unlike most encrypted data, hashed data has a fixed length and the same input always produces the same hash, so it can be operated on with joins. But a big downside is that it’s (virtually) irreversible. So, like anonymization, once the data is converted, it cannot be turned back into plain text or source data for further analysis.

Hashing is most often used to protect passwords stored in databases. You may also hear the term “salting” applied to password hashing. This is the practice of adding a random value to each password before it is hashed, so that identical passwords produce different hashes and the password cracking process becomes much harder. Hashing works very well for password protection but is not ideal for PII that needs to be used.
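A short sketch using Python's standard `hashlib` and `secrets` modules shows both behaviors: a plain hash that is deterministic and fixed-length, and a salted password hash that differs each time. The helper names and the iteration count are illustrative assumptions, not a recommended production configuration.

```python
import hashlib
import secrets
from typing import Optional, Tuple


def sha256_hex(value: str) -> str:
    """Plain one-way hash: deterministic and always 64 hex characters."""
    return hashlib.sha256(value.encode("utf-8")).hexdigest()


def hash_password(password: str, salt: Optional[bytes] = None) -> Tuple[bytes, bytes]:
    """Salted password hash; a fresh random salt is generated if none is given."""
    if salt is None:
        salt = secrets.token_bytes(16)
    # The salt is mixed in before hashing, not appended to the finished hash.
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, 100_000)
    return salt, digest


# Same input, same hash: this is what makes joins on hashed columns possible.
h1 = sha256_hex("alice@example.com")
h2 = sha256_hex("alice@example.com")

# Same password, different salts: the stored digests no longer match.
salt_a, digest_a = hash_password("hunter2")
salt_b, digest_b = hash_password("hunter2")

# Verification re-hashes the candidate password with the stored salt.
_, check = hash_password("hunter2", salt_a)
```

Notice there is no function that turns `h1` back into the email address. That is the one-way trip: you can compare hashes, but you cannot recover the source data from them.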

Encryption, anonymization and one-way hashing, therefore, can be shortsighted moves. Your organization’s success depends on allowing authorized users to access the original data now and in the future, as long as you can track and report on the usage. At the same time, you must also ensure that sensitive data is useless to everyone else.

Tokenization: The Clear Cloud Data Security Winner

When looking at tokenization vs encryption, it’s clear that tokenization overcomes the challenges other data security solutions face by preserving the connections and relationships between data columns and sets. A token isn’t a mathematical scramble of the original data, as with encryption, or a group of ranges, as with anonymized data. Authorized analysts can query tokenized data for insights without having access to the underlying PII, while the token itself remains meaningless to any unauthorized user or hacker.

With modern tokenization techniques, you can apply policies and authorize access at scale for thousands of users. You can also track and report on the secure access of sensitive data to ensure compliance with privacy regulations worldwide. You can’t do this with anonymization, hashing or encryption.
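One way to picture policy-based access with tracking is a vault that checks the caller's role before de-tokenizing and records every attempt. The sketch below is a simplified illustration: the `GovernedVault` class, the role names and the log fields are all hypothetical.

```python
from datetime import datetime, timezone


class GovernedVault:
    """Hypothetical vault that enforces a role policy and logs every request."""

    def __init__(self, policy):
        self._policy = policy    # role -> set of field names that role may read
        self._entries = {}       # token -> (field name, original value)
        self.audit_log = []

    def store(self, token, field, value):
        self._entries[token] = (field, value)

    def detokenize(self, user, role, token):
        field, value = self._entries[token]
        allowed = field in self._policy.get(role, set())
        # Record the attempt whether or not it succeeds.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "field": field,
            "allowed": allowed,
        })
        if not allowed:
            raise PermissionError(f"role {role!r} may not read {field!r}")
        return value


policy = {"support_agent": {"phone"}, "analyst": set()}
vault = GovernedVault(policy)
vault.store("tok_9f2c", "phone", "+1-555-0100")

# A support agent is allowed to see the real phone number.
phone = vault.detokenize("dana", "support_agent", "tok_9f2c")

# An analyst can work with the token but cannot de-tokenize it.
try:
    vault.detokenize("sam", "analyst", "tok_9f2c")
    analyst_blocked = False
except PermissionError:
    analyst_blocked = True
```

Both requests end up in `audit_log`, including the denied one, which is the kind of record a compliance report on sensitive data access would draw from.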

When it comes to tokenization vs encryption, tokenization is the more flexible tool for secure access and privacy compliance. This is critical for organizations quickly moving from storing gigabytes to petabytes of data in the cloud. You can feed tokenized data directly from cloud data warehouses like Snowflake into any application. You can do this with complete confidence that all the data, including sensitive PII, will be protected even from the database admin while making it easy for authorized data end-users to collaborate and deliver valuable insight quickly. Isn’t that the whole point?

See how ALTR can integrate with leading data catalog and ETL solutions to deliver automated tokenization from on-premises to the cloud. Get a demo.